To create surveys that get meaningful insights, you need to start with a solid foundation: good survey questions. This may seem obvious, but it’s pretty important for your overall survey success. When questions are worded well, formatted correctly, and carefully considered from all angles, they pave the way for higher response rates and a better chance of getting high-quality data that’ll help your business thrive.

What makes a survey question good?

Effective survey questions are defined by several key characteristics, so it’s worth asking:

Are your questions clear?

The wording of a question should be specific, easy to understand, and unambiguous. Double-barreled questions, double negatives, and jargon are just some of the roadblocks to clear questions.

Are your questions neutral and unbiased?

The way you ask questions has a huge impact on the quality of responses you receive. Leading questions, loaded questions, or opinion-based questions will muddy your results.

Are you using the right question types for your needs?

We get it, there are a lot of different question types and you may not always know which one you should use. But getting it right means creating a better survey experience where the questions are clear and the results are exactly what you need.

Does your survey design support your survey questions?

Wording isn’t everything. Good questions are worded well and enhanced by thoughtful survey design. This includes skip logic, answer choice randomization, and careful utilization of required questions.

When survey questions aren’t good there can be major consequences for your results. Confusing questions might cause your respondents to experience survey fatigue, which can lead to abandoned surveys or inaccurate responses. And if your respondents believe you’ve inserted bias throughout your survey or bombarded them with leading questions, they may develop a negative view of your company and change how they would naturally respond. Either way, you’ll end up with survey responses that aren’t necessarily thoughtful, honest, or valuable.

Wondering what you can do to guarantee good survey questions? Well, you can always count on the SurveyMonkey Question Bank to automatically supply customizable questions based on best practices. Our survey templates come fully stocked with expert-written questions across a wide range of categories, like market research, customer satisfaction, product testing, and more. You can also use SurveyMonkey Genius® to get recommendations on the best question type to use—it'll even detect issues with survey structure or question formats.

But beyond those go-to resources, there are some things to keep in mind as you create your surveys. Let’s go over a few types of survey questions and tips for better questions.

Open-ended questions

Want to hear from respondents in their own words? You’ll need an open-ended question, which requires respondents to type their answer into a text box instead of choosing from pre-set answer options. Since open-ended questions are exploratory in nature, they invite insights into respondents’ opinions, feelings, and experiences. In fact, good open-ended questions will often dig into all three and serve as follow-ups to previous closed-ended questions.

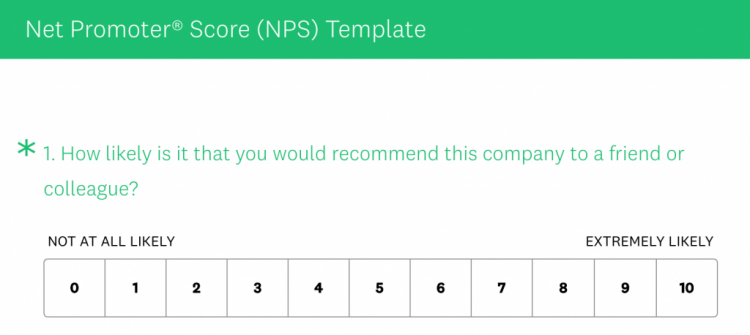

You can see this in action in our Net Promoter Score (NPS) survey template. After respondents answer the closed-ended NPS question of how likely it is that they would recommend a company to a friend or colleague, they encounter one of these open-ended questions:

- What changes would this company have to make for you to give it an even higher rating?

- What does this company do really well?

Which open-ended question the respondent sees depends on how likely they are to recommend the company. Either way, both questions are an opportunity for marketers to get a better understanding of their company’s NPS and more detailed information about customers’ likes or dislikes.
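
To make the branching described above concrete, here is a minimal Python sketch of that kind of skip logic. It is an illustration only, not how the template is built: the function name `follow_up_question` and the cutoff of 9 are assumptions (9 and 10 are the scores conventionally counted as promoters on the standard 0 to 10 NPS scale), and the template itself may branch differently.

```python
# Hypothetical sketch of skip logic for an NPS follow-up question.
# The cutoff below is an assumption, not taken from the survey template.
PROMOTER_CUTOFF = 9  # assumed: answers of 9-10 count as "likely to recommend"

FOLLOW_UP_HIGH = "What does this company do really well?"
FOLLOW_UP_LOW = (
    "What changes would this company have to make "
    "for you to give it an even higher rating?"
)


def follow_up_question(nps_answer: int) -> str:
    """Pick the open-ended follow-up for an answer on the 0-10 NPS scale."""
    if not 0 <= nps_answer <= 10:
        raise ValueError("NPS answers must be between 0 and 10")
    if nps_answer >= PROMOTER_CUTOFF:
        return FOLLOW_UP_HIGH
    return FOLLOW_UP_LOW


# Example: a respondent who answered 10, and one who answered 6.
print(follow_up_question(10))
print(follow_up_question(6))
```

Because each respondent only ever sees one of the two follow-ups, the survey stays short while the open-ended answer stays relevant to the score they just gave.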

Another good use of open-ended questions is as a catch-all final survey question. Just think of how many times you’ve seen an online survey end with a question like “Do you have any other comments, questions, or concerns?” (You can find it in our customer experience survey template, among others.) This is a great question to close things out with because it gives respondents a chance to provide additional information or share details or concerns that weren’t covered by previous closed-ended questions. Best of all, it can offer a ton of new insights and opportunities for further research. For instance, if respondents use the open-ended text box to voice their concerns about your product’s accessibility, something you hadn’t considered as a customer experience pain point, then you know that’s an issue that should be addressed.

Remember, open-ended questions are about quality, not quantity. They take more time and effort for respondents to answer, so you should try to limit how many you include. In general, it’s a good idea to cap it at two open-ended questions and, if possible, put them on a separate page at the end of your survey. You should also be careful about requiring responses to open-ended questions, especially if they’re sensitive questions and aren’t following up on a previous closed-ended response. If you force respondents to answer an open-ended question that they feel is irrelevant or intrusive, they might make something up or respond with gibberish just to move on with the survey.

Closed-ended questions

Closed-ended questions present respondents with a fixed list of answer choices. They may lack the freedom of open-ended questions, but they are designed to collect conclusive answers and quantifiable data. Think of the difference between being asked which of three specific restaurants you’d like to eat dinner at, versus being asked the open-ended question “Where do you want to go for dinner?”

Closed-ended questions can come in many forms, including multiple choice questions, Likert rating scales, dropdown menus, yes/no questions, ranking questions, or checkboxes. Choosing the right closed-ended question type depends on what information you’re trying to uncover. Maybe you’re asking a demographic question and want to give respondents the ability to check all answer choices that apply. In that case, you’ll need to present your answer choices in checkbox format. If you’d rather ask about demographics in a multiple choice question format, be sure to include a “fill in the blank” answer option for respondents to add their own answer. This “fill in the blank” trick is a great solution if you’re ever concerned that none of your answer options will apply to your respondents.

Because closed-ended questions are based on pre-set answer options, it’s critical that you consider the respondent experience and how your wording might be interpreted (or misinterpreted). Good closed-ended questions don’t overstep or overreach. Here are three examples of bad closed-ended questions for an employee engagement questionnaire:

- Our company culture is frequently rated one of the best in the industry. How would you rate our company culture? (rating scale)

- Management within my organization communicates well and recognizes strong job performance. (Likert scale: strongly agree → strongly disagree)

- When you collaborate with employees in other departments, how satisfied or dissatisfied are you with the level of communication? (Likert scale: extremely satisfied → extremely dissatisfied)

The first is an example of a leading question that’s guiding the respondent to answer in a certain way. The second is a double-barreled question, meaning it’s referencing more than one issue or topic but only allows for one answer. In this case, a respondent may feel that management typically recognizes a job well done but doesn’t necessarily communicate well overall.

The third question is making a few mistakes. It assumes that the respondent regularly collaborates across departments. It also doesn’t specify what kind of communication it means—email, Slack messaging, verbal? If the survey has already used screening questions or skip logic to determine the respondents’ collaboration habits, then this isn’t a problem. If not, this kind of assumptive question may not get the accurate insights that the survey creator wants.

To ensure good closed-ended survey questions, keep the respondent experience top of mind. Preview your survey from their perspective and pay particular attention to your questions’ wording and format.

Rating scale questions

Under the umbrella of closed-ended questions, you’ll find rating scale questions (sometimes called ordinal questions). They use a numerical scale of any range (often 0 to 100 or 1 to 10) and ask respondents to select the number that most accurately represents their response. NPS questions are a good example of rating scale questions, since they use a numerical scale to find out how likely customers are to recommend a company’s product or service.

Here are a few more examples of rating scale questions:

- How would you rate our customer support on a scale of 1-5?

- How likely is it that you would recommend our website to a friend or family member?

- How would you rate the instructor of today’s workshop?

So where can you run into trouble with rating scale questions? One common pitfall is not including the context that respondents need to fully understand the question. For example, say you asked respondents “How likely is it that you would purchase our new product?” If you don’t explain what the numbers on your scale mean (for instance, which end stands for “extremely likely” and which for “not at all likely”), they may not know which answer option is right for them.

Another thing to look out for is what kind of rating scale question you’re using. Star rating and Likert scale questions (more on those below) are both types of rating scales. Star rating questions are a classic way to ask respondents to rate a product or experience. (With SurveyMonkey, you can even go beyond stars and choose from hearts, thumbs, or smiley faces for this type of question.) You probably see them all the time in your daily life: product reviews, 5-star hotels, restaurants with Michelin stars, customer service ratings, or even teacher evaluations.

But star rating scales are only appropriate for some questions, and they can be especially confusing if not labeled clearly. Let’s go back to that classic NPS question “How likely is it that you would recommend this company to a friend?” If you asked the same thing with the answer options in star format it wouldn’t be as clear as the typical numerical scale, and would likely throw respondents off.

Likert scale questions

Likert scales are a specific type of rating scale. They’re the “agree or disagree” and “likely or unlikely” questions that you often see in online surveys, and they’re used to measure attitudes and opinions. They go beyond the simpler “yes/no” question, using a 5- or 7-point rating scale that goes from one extreme attitude to another. For example:

- Strongly agree

- Agree

- Neither agree nor disagree

- Disagree

- Strongly disagree

The middle-ground answer choice is important for Likert scale questions (and rating scale questions in general). Good Likert scale questions are all about measuring sentiment about something specific, with a deep level of detail. To do that, you need an accurate and symmetrical scale that allows for measuring neutral feelings as well.

What else makes a good Likert scale question? Accuracy is up there; the more specific you can be about what you’re asking, the better data you’ll get. For instance, rather than simply asking a broad single question about event satisfaction in a post-event questionnaire, you could use separate questions to dig into respondents’ satisfaction with the speakers, the registration process, the schedule, the venue, and more. That way, you can zero in on the nuances of their experience and get a richer understanding of your event’s successes and areas in need of improvement.

Good Likert scale questions are also deliberate in how they use adjectives. It should be very clear which grade is higher or bigger—you don’t want your respondents to puzzle over whether “pretty much” is more than “quite a lot.” If descriptive words aren’t in an understandable order with logical measurements, your results won’t be as accurate.

Here’s what we recommend when it comes to the answer options of a Likert scale:

- Bookend your scale with extremes that use words like “extremely” or “not at all.”

- Make sure the midpoint of your Likert scale is moderate or neutral, with choices like “moderately” or “neither agree nor disagree.”

- For the rest of your answer options, use very clear descriptive terms like “very” or “slightly.”

It’s also best to keep your Likert scale ranges focused on one idea. For example, for a question about someone’s personality, it’s better to use a scale that ranges from “extremely outgoing” to “not at all outgoing,” rather than a scale that ranges from “extremely outgoing” to “extremely shy.” This is called a unipolar scale, which means it measures how much of a single quality is present, from a lot of it to none of it, rather than running between two opposite qualities. In this case, it’s helpful for a respondent who might consider themselves the opposite of “extremely outgoing” but doesn’t feel that “extremely shy” is right for the other end of the spectrum. Unipolar scales present concepts in a way that is easier for people to grasp. They are also more methodologically sound, which is great news for the data you collect.
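
The labeling advice above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper name `unipolar_scale` is hypothetical, and the five intensity words are one reasonable set drawn from the recommendations above (extremes at the ends, “moderately” in the middle, “very” and “slightly” in between), not a fixed standard.

```python
from typing import List

# Assumed wording for a 5-point unipolar scale, ordered from most to least.
INTENSITY_WORDS = ["Extremely", "Very", "Moderately", "Slightly", "Not at all"]


def unipolar_scale(quality: str) -> List[str]:
    """Build answer options that all describe one quality (hypothetical helper)."""
    quality = quality.strip()
    if not quality:
        raise ValueError("quality must be a non-empty word or phrase")
    # Every option reuses the same quality, so the scale stays on one idea.
    return [f"{word} {quality}" for word in INTENSITY_WORDS]


# Example: the personality question discussed above.
for option in unipolar_scale("outgoing"):
    print(option)
```

Generating every label from the same word keeps the scale on a single idea and makes the order of the options obvious to respondents.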

Keep labels in mind when working with Likert scale questions. From their clarity and specificity to their unipolar framing, labels are often the key to making sure respondents understand what is being asked and how their attitude or experience fits within the answer options.

Good questions are one of the top ingredients for good surveys. That’s why it’s so important to prioritize well-written questions and view your entire survey from your respondents’ perspective. Keeping an eye out for things like bias, accuracy, and consistency will lead to better questions—and better questions lead to game-changing results you can use throughout your business.